News

- Poster Sessions: Detailed Information

- Workshop Location and Date: Topaz Concourse, Singapore Expo, Singapore. 26 January 2026, 8:30 AM to 5:00 PM

- We have updated the Tentative Schedule.

- Please follow us on Twitter for the latest news.

- Submission Deadline: 23 October 2025 (AoE). Due to an OpenReview outage, we have extended the deadline from 22 October 2025 (AoE).

- Speaker List Updated...

- AAAI Early Registration deadline is approaching: 19 Nov 2025 (AoE). Please visit Link

About

Call for Papers

Key Dates

- Paper Deadline: October 23, 2025 (AoE), extended from October 22, 2025 (AoE)

- Notification: November 8, 2025 (AoE), moved from November 5, 2025

- Camera-ready: Dec 1, 2025 (AoE). Authors of accepted papers can deanonymize the paper and update it on OpenReview.

All deadlines follow the Anywhere on Earth (AoE) timezone.

Submission Site

Submit papers through the Deployable AI Workshop Submission Portal on OpenReview (Workshop Submission Portal)

Scope

We welcome contributions across a broad spectrum of topics, including but not limited to:

- Deployable AI: Concepts and Models

- Privacy-Preserving AI

- Language Models & Deployability

- Explainable and Interpretable AI

- Fairness and Ethics in AI

- AI Models and Social Impact

- Trustworthy AI Models

Submission Guidelines

- Short Papers: 4 pages

- Long Papers: 7 pages

- You will need to submit a 2-page review response PDF that includes the reviews. The review response should also follow the AAAI-style format.

Tentative Schedule

This is the tentative schedule of the workshop. All slots are provided in local time.

Morning Session

| Time | Session |
| --- | --- |
| 09:00 AM - 09:05 AM | Introduction and Opening Remarks |
| 09:05 AM - 09:40 AM | Keynote 1 - AI Capabilities vs. AI Deployment: Models and methods to fill the gap, Prof. Ramayya Krishnan, Carnegie Mellon University |
| 09:40 AM - 10:15 AM | Keynote 2 - Towards Reliable Assistance: Safety and Security in Sequential Decision-Making, Pradeep Varakantham, Singapore Management University |
| 10:15 AM - 10:25 AM | Oral Talk 1 - DETNO: A Diffusion-Enhanced Transformer Neural Operator for Long-Term Traffic Forecasting |
| 10:25 AM - 10:35 AM | Oral Talk 2 - Dynamic Orthogonal Continual Fine-tuning for Mitigating Catastrophic Forgetting of LLMs |
| 10:35 AM - 11:30 AM | Break and Poster Session 1 |
| 11:30 AM - 11:40 AM | Oral Talk 3 - Alignment-Constrained Dynamic Pruning for LLMs: Identifying and Preserving Alignment-Critical Circuits |
| 11:40 AM - 11:50 AM | Oral Talk 4 - Efficient Multi-Model Orchestration for Self-Hosted Large Language Models |
| 11:50 AM - 12:00 PM | Oral Talk 5 - A Task-Level Explanation Framework for Meta-Learning Algorithms |
| 12:00 PM - 12:50 PM | Poster Session 2 |
| 12:50 PM - 02:00 PM | Lunch Break |
| 02:00 PM - 02:30 PM | Global North, South & the Future: A Fireside Chat on Responsible AI, Prof. Gopal Ramchurn (Uni of Southampton, RAI UK), Prof. Balaraman Ravindran (CeRAI & WSAI, IIT Madras) |
| 02:30 PM - 03:00 PM | Keynote 3 - Foundation Motion: Auto-Labeling and Reasoning about Spatial Movement in Videos, Boyi Li, NVIDIA Research, UC Berkeley |
| 03:00 PM - 03:10 PM | Oral Talk 6 - Explainability Methods Can Be Biased: An Empirical Investigation of Gender Disparity in Post-hoc Methods |
| 03:10 PM - 04:05 PM | Break 2 and Poster Session 3 |
| 04:05 PM - 04:40 PM | Keynote 4 - Abstract: Finding supervision for complex tasks, Pang Wei Koh, University of Washington |
| 04:40 PM - 04:50 PM | Oral Talk 7 - V-OCBF: Learning Safe Filters from Offline Data via Value-Guided Offline Control Barrier Functions |
| 04:50 PM - 05:00 PM | Oral Talk 8 - Quantifying Strategic Ambiguity in Corporate Language for AI-Driven Trading Strategies |
| 05:00 PM - 05:10 PM | Oral Talk 9 - Safe and Deployable LLM Adaptation: Directional Deviation Index–Guided Model Pruning |
| 05:10 PM - 05:15 PM | Closing Remarks |

Invited Speakers

Ramayya Krishnan

Carnegie Mellon University

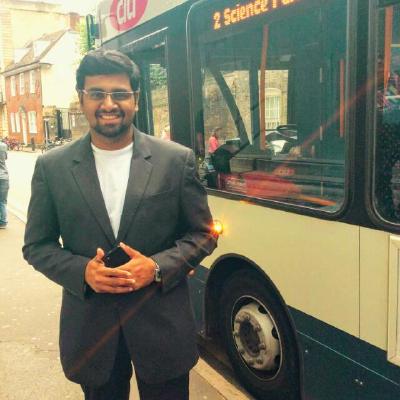

Pradeep Varakantham

Singapore Management University

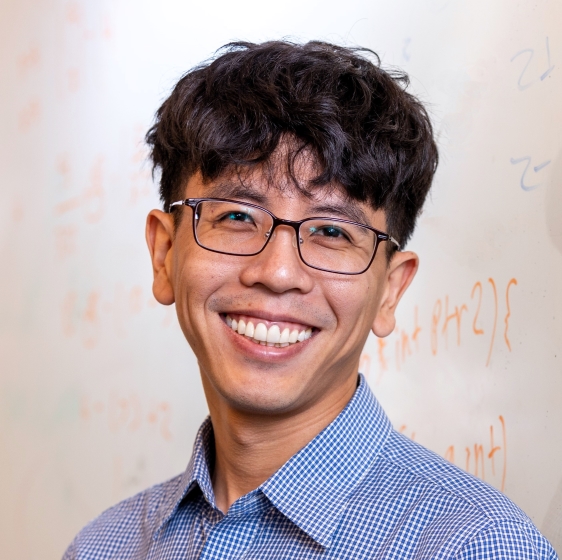

Pang Wei Koh

University of Washington

Boyi Li

NVIDIA Research, UC Berkeley

Workshop Organizers

Rahul Vashisht

IIT Madras

Program Committee

- Anuja Agrawal – Senior Staff Engineer and Researcher, Google

- Monica Sunkara – AWS Bedrock Agents, Science Leader

- Abdul Bakey Mir – Ph.D. Scholar, IIT Madras

- Srividhya Sethuraman – M.S. Scholar, IIT Madras

- Aayushman – Researcher, IIT Madras

- Shivangi Shreya – M.S. Scholar, IIT Madras

- Ritwiz Kamal – Ph.D. Scholar, IIT Madras

- Nasibullah – Ph.D. Scholar, Aalto University

- Sonam – Researcher, International Business Machines (IBM)